Administering a High-Availability Cluster

Several Secure Mobile Access appliances support clustering two identical appliances behind one virtual IP address: the EX9000, EX7000, and EX6000. A Secure Mobile Access cluster provides high availability through stateful user authentication failover and centralized administration. This redundancy ensures that an appliance remains available even if one of the nodes fails.

This section explains the capabilities of a Secure Mobile Access cluster and describes the required procedures for installing, configuring, and maintaining a cluster. Other topics related to multiple appliances are covered elsewhere:

• To increase capacity and support more users, you can cluster up to eight appliances using an external load balancer. For more information, see Configuring a High-Capacity Cluster.

• You can distribute settings to multiple Secure Mobile Access appliances, whether they are separated geographically or clustered behind an external load balancer. See Replicating Configuration Data for more information.

Related Topics

• Overview: High-Availability Cluster

• Installing and Configuring a Cluster

Overview: High-Availability Cluster

A high-availability cluster is designed to prevent a single point of failure. When you deploy a cluster, you can distribute applications over more than one computer, which avoids unnecessary downtime if a failure occurs. The two-node cluster appears as a single system to users, applications, and the network, while providing a single point of control for administrators.

The EX9000 appliance supports a two-node high-availability cluster for up to 20,000 users; a pair of EX7000 appliances supports up to 5,000 users in a high-availability configuration; and a pair of EX6000 appliances can handle up to 250 users.

• Synchronized Cluster Administration

There are two configurations in which you can deploy a Secure Mobile Access cluster—a dual-homed configuration or a single-homed configuration (see Network Architecture for details on these configurations). When deploying a cluster in either configuration, the two appliances must be connected to one another over the cluster network (the backplane). Connecting the cluster interface of one appliance with the cluster interface of the other creates a private network over which the two cluster nodes can communicate (for state synchronization and credential sharing).

Regardless of the deployment scenario, the following connectivity requirements apply:

• To communicate with internal resources, both nodes in the cluster must be connected to the network switch facing the intranet (in a single-homed configuration, this is the same switch that faces the internet).

• To provide redundancy, both nodes in the cluster must be connected to the network switch facing the internet.

• Both cluster nodes must be connected to each other using the cluster interface.

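In a dual-homed configuration, the cluster architecture looks like this:

[Figure: Cluster architecture in a dual-homed configuration]

In a single-homed configuration, the cluster architecture looks like this:

[Figure: Cluster architecture in a single-homed configuration]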

Note The network tunnel service is not available in a single-homed configuration.

The internal monitoring service detects node failure and performs failover to the Standby node. All subsequent traffic is handled by the newly promoted Active node.

The cluster provides stateful user authentication failover: authentication credentials are replicated in real time to an in-memory cache shared by both nodes. Because the nodes are synchronized, the Standby node can take over connections initiated by the Active node without requiring users to reauthenticate.

The cluster does not provide stateful application session failover. Disruption to users depends on the TCP/IP disconnect tolerance of the applications that they are using at the time the failover occurs.
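The difference between authentication failover and application session failover can be shown with a small model. The following Python sketch is simplified and hypothetical (the `Cluster` and `Node` classes are illustrative, not part of Secure Mobile Access): credentials live in a cache shared by both nodes and survive a failover, while application sessions are held per node and are lost with the failed node.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    # Application (TCP) sessions are held per node and are never replicated.
    app_sessions: set[str] = field(default_factory=set)


@dataclass
class Cluster:
    active: Node
    standby: Node
    # Hypothetical stand-in for the in-memory credential cache shared by both nodes.
    shared_credentials: dict[str, str] = field(default_factory=dict)

    def login(self, user: str, token: str, app: str) -> None:
        """Authenticate a user on the Active node and open an application session."""
        self.shared_credentials[user] = token
        self.active.app_sessions.add(f"{user}:{app}")

    def failover(self) -> None:
        """Promote the Standby node; sessions on the failed node are lost."""
        failed = self.active
        failed.app_sessions.clear()
        self.active, self.standby = self.standby, failed

    def needs_reauth(self, user: str) -> bool:
        """A user must reauthenticate only if no shared credential exists."""
        return user not in self.shared_credentials


cluster = Cluster(active=Node("node1"), standby=Node("node2"))
cluster.login("alice", "token-123", "crm")
cluster.failover()
print(cluster.active.name)            # node2
print(cluster.needs_reauth("alice"))  # False: credentials survived the failover
print(cluster.active.app_sessions)    # set(): the CRM session must be reopened
```

Whether a user notices the lost session depends, as described above, on how the application tolerates a TCP/IP disconnect.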

Synchronized Cluster Administration

You administer the nodes of a Secure Mobile Access cluster from one master AMC. During initial setup, you assign one appliance to be the Active (master) node and the other to be the Standby (slave) node.

After installing the software on both nodes, you log in to AMC on the master node. From that point on, this node controls the propagation and synchronization of policy and configuration across both nodes. If you log in to AMC on the slave node, its Node Administration page appears, displaying a message telling you that it is not the master node (it also displays status information about the services on the slave node).

Each node in the cluster hosts configuration and policy data stores. The master node, which communicates with the slave node through the cluster interface, synchronizes this data as follows:

• When you add a second node to a cluster, the master node provisions the new node with configuration data, after which the new node starts up its access services.

• When you apply a configuration change on the master node, AMC propagates the change to the slave node. This remains true even if you are using replication to distribute configuration across a number of appliances. The HA cluster is identified in logs and in the AMC UI by its cluster name.
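The two synchronization rules above can be sketched as follows. This is a hypothetical Python model that assumes configuration can be represented as a dictionary; `ClusterNode`, `add_node`, and `apply_change` are illustrative names, not Secure Mobile Access APIs.

```python
import copy
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ClusterNode:
    name: str
    config: dict[str, Any] = field(default_factory=dict)
    services_running: bool = False


def add_node(master: ClusterNode, new_node: ClusterNode) -> None:
    """Provision the new node with the master's full configuration, then start its services."""
    new_node.config = copy.deepcopy(master.config)
    # Services start only after provisioning, mirroring the order described above.
    new_node.services_running = True


def apply_change(master: ClusterNode, slave: ClusterNode, key: str, value: Any) -> None:
    """Apply a change on the master and propagate the same change to the slave."""
    master.config[key] = value
    slave.config[key] = copy.deepcopy(value)


master = ClusterNode("node1", config={"default_domain": "example.com"}, services_running=True)
slave = ClusterNode("node2")
add_node(master, slave)
apply_change(master, slave, "session_timeout_minutes", 30)
print(slave.config == master.config)  # True
```

The deep copies keep the two nodes' data stores independent, so a later edit on one node's dictionary cannot silently change the other.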

Note The slave (Standby) node provides a redundant AMC that promotes itself to the master (Active) node if the original master node fails. Once a node becomes Active, it remains in that role until you manually switch the role (or reboot it).


CAUTION If you are upgrading the software on the master node, do not designate the slave node as the master while the original master node is down. Doing so prevents the upgraded node from running: it would be rejected by the other node because of a version mismatch. Restoring the full cluster requires downtime for your users while you upgrade the second node.

For a step-by-step description of what happens when a master node fails, see Master (AMC) Node Fails. For detailed procedures on how to manage the cluster using the master AMC, see Managing the Cluster.

Note Administering a high-availability cluster differs from replication, where you define a “collection” of appliances that will have settings in common and that will operate in peer-to-peer mode. See Replicating Configuration Data for more information.

Installing and Configuring a Cluster

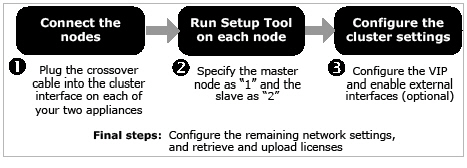

Installing a Secure Mobile Access cluster requires the following steps:

• Rack-mount both appliances

• Connect the cluster interface between the two appliances

• Run Setup Tool on each appliance

• Configure the cluster’s virtual IP address (VIP)

The following diagram illustrates the workflow:

[Figure: Cluster installation workflow]

Before installing and configuring your Secure Mobile Access cluster, it is helpful to gather the following information:

IP Addresses for a Dual-Homed Installation

• Four IP addresses, one for each node's internal and external interfaces. The two internal addresses must share a subnet, as must the two external addresses; the internal and external addresses should be on separate subnets.

• One virtual IP address (VIP) for the cluster. This VIP serves as the single external address for the entire cluster and must be on the same subnet as the external interface.

• If you are using the network tunnel service in a clustered environment, you need an additional internal virtual IP address for the network tunnel service. This serves as a back-connect address for the entire cluster and must be on the same subnet as the internal interface.

IP Addresses for a Single-Homed Installation

• Two IP addresses on the same subnet, one for each node’s internal interface.

• One virtual IP address (VIP) for the cluster. The VIP serves as the single external address for the entire cluster and must be on the same subnet as the internal interface.
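Before running Setup Tool, you can check an address plan against these rules. The following Python sketch uses the standard `ipaddress` module; the `ClusterAddressPlan` structure and the example addresses (taken from documentation ranges) are hypothetical, and the check covers only the IPv4 subnet rules listed above.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClusterAddressPlan:
    """Hypothetical address plan for a two-node cluster."""
    internal: tuple[str, str]  # internal interface addresses of the two nodes
    internal_prefix: int       # prefix length of the internal subnet, e.g. 24
    vip: str                   # cluster virtual IP address
    external: Optional[tuple[str, str]] = None  # None means single-homed
    external_prefix: Optional[int] = None
    tunnel_vip: Optional[str] = None  # network tunnel service VIP (dual-homed only)


def _net(addr: str, prefix: int) -> ipaddress.IPv4Network:
    # strict=False accepts a host address and derives its network.
    return ipaddress.ip_network(f"{addr}/{prefix}", strict=False)


def _in(addr: str, net: ipaddress.IPv4Network) -> bool:
    return ipaddress.ip_address(addr) in net


def check_plan(plan: ClusterAddressPlan) -> list[str]:
    """Return the subnet rules the plan violates; an empty list means it passes."""
    problems = []
    internal_net = _net(plan.internal[0], plan.internal_prefix)
    if not _in(plan.internal[1], internal_net):
        problems.append("internal addresses are not on the same subnet")

    if plan.external is None:  # single-homed
        if not _in(plan.vip, internal_net):
            problems.append("VIP is not on the internal subnet")
        if plan.tunnel_vip is not None:
            problems.append("network tunnel VIP applies only to a dual-homed cluster")
        return problems

    assert plan.external_prefix is not None, "dual-homed plans need external_prefix"
    external_net = _net(plan.external[0], plan.external_prefix)
    if not _in(plan.external[1], external_net):
        problems.append("external addresses are not on the same subnet")
    if internal_net.overlaps(external_net):
        problems.append("internal and external subnets should be separate")
    if not _in(plan.vip, external_net):
        problems.append("VIP is not on the external subnet")
    if plan.tunnel_vip is not None and not _in(plan.tunnel_vip, internal_net):
        problems.append("network tunnel VIP is not on the internal subnet")
    return problems


dual = ClusterAddressPlan(
    internal=("10.0.0.11", "10.0.0.12"), internal_prefix=24, vip="203.0.113.10",
    external=("203.0.113.11", "203.0.113.12"), external_prefix=24, tunnel_vip="10.0.0.10",
)
single = ClusterAddressPlan(
    internal=("10.0.0.11", "10.0.0.12"), internal_prefix=24, vip="192.168.1.10",
)
print(check_plan(dual))    # []
print(check_plan(single))  # ['VIP is not on the internal subnet']
```

Returning a list of problems rather than stopping at the first one lets you fix every issue in the plan in a single pass.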

Related Topics

• Step 1: Connect the Cluster Network

• Step 2: Run Setup Tool on All Nodes of the Cluster

• Step 3: Configure the Cluster’s External Virtual IP Address

• Final Steps: Network Configuration and Licensing

Step 1: Connect the Cluster Network

Before running Setup Tool, you must connect the cluster network between the two nodes of the cluster.

1. Find the network crossover cable supplied with the appliance.

2. Use this cable to connect the cluster interface on one appliance with the cluster interface on the other appliance. See Connecting the Appliance.

CAUTION The two nodes of the cluster must be connected using the crossover cable provided with the appliance. Do not substitute any other cabling. Do not place any other network hardware devices, such as a router or a switch, between the two nodes.

Step 2: Run Setup Tool on All Nodes of the Cluster

Setup Tool is a command-line utility that performs the initial network setup for an appliance. Details about running this tool in a single-node environment are explained in Next Steps. When installing a cluster, run Setup Tool on one appliance and then repeat the process on the second appliance. Review Tips for Working with Setup Tool before continuing.

1. Make a serial connection to one appliance in the cluster, confirm that the cluster network between the two nodes is connected, and then turn on the appliance using the power button on the front panel. Setup Tool runs automatically on the appliance (you can also invoke it by typing setup_tool).

2. When you’re prompted to log in, type “root” for the username.

3. You’re prompted to type an IP address, subnet mask, and (optionally) a gateway for the internal interface. You’ll use this interface to connect to the appliance from a Web browser and continue setup using AMC.

a. Type an IP address for the internal interface connected to your internal (or private) network and then press Enter.

b. Type a subnet mask for the internal network interface and then press Enter.

c. If the computer from which you'll access AMC is on a different network than the appliance, you must specify a gateway. Type the IP address of the gateway used to route traffic to the appliance and then press Enter.

If you’re accessing AMC from the same network on which the appliance is located, simply press Enter.

4. You’re prompted to review the information you provided. Press Enter to accept the current value, or type a new value.

5. You are prompted to indicate whether this node will be part of a cluster. Type y and then press Enter to continue.

6. Specify whether the current appliance is the master node (“1”) or slave node (“2”), and then press Enter.

The cluster subnet and IP address are automatically assigned.

7. You’re prompted to review the information you provided. Press Enter to accept the current value, or type a new value.

8. You’re prompted to save and apply your changes. Press Enter to save your changes.

Setup Tool saves your changes and restarts the necessary services. It also generates Secure Shell (SSH) keys using the information you provided. During this time you will receive minimal feedback; be patient and do not assume that Setup Tool is not responding.

When the changes are complete, a message appears indicating that the initial setup is complete.

9. After running Setup Tool on the first node, repeat the process for the second node.
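Steps 3c and 6 each involve a decision that is easy to get wrong. The following Python sketch, using the standard `ipaddress` module, expresses the rule from step 3c (a gateway is needed only when the AMC workstation is on a different network than the appliance's internal interface) and checks that the two nodes received different roles in step 6. The function names are hypothetical helpers for planning; they are not part of Setup Tool.

```python
import ipaddress


def gateway_required(appliance_ip: str, netmask: str, amc_client_ip: str) -> bool:
    """Return True if the AMC workstation is outside the internal subnet (step 3c)."""
    internal_net = ipaddress.ip_network(f"{appliance_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(amc_client_ip) not in internal_net


def role_from_choice(choice: str) -> str:
    """Map the step 6 answer to a role: "1" is master, "2" is slave."""
    roles = {"1": "master", "2": "slave"}
    if choice not in roles:
        raise ValueError(f"expected '1' or '2', got {choice!r}")
    return roles[choice]


def roles_are_valid(first_node_choice: str, second_node_choice: str) -> bool:
    """A two-node cluster needs exactly one master and one slave."""
    roles = {role_from_choice(first_node_choice), role_from_choice(second_node_choice)}
    return roles == {"master", "slave"}


print(gateway_required("10.0.0.11", "255.255.255.0", "10.0.0.50"))  # False
print(gateway_required("10.0.0.11", "255.255.255.0", "10.1.0.50"))  # True
print(roles_are_valid("1", "2"))  # True
print(roles_are_valid("1", "1"))  # False
```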

Step 3: Configure the Cluster’s External Virtual IP Address

The cluster’s virtual IP address serves as the single external address for the entire cluster.

1. Log in to AMC on the node that you want to be the master.

2. From the main navigation page, click Network Settings.

3. Click the Edit link in the Basic area. The Configure Basic Network Settings page appears.

4. In the Cluster configuration area, type the cluster’s Virtual IP address. This must be on the same subnet as the IP address for the interface connected to the Internet (internal interface in a single-homed configuration, external interface in a dual-homed configuration).

If you are using the network tunnel service in a clustered environment, type the virtual IP address in the Network tunnel service virtual IP address box.

This serves as the single internal address of the network tunnel service for the entire cluster. This VIP must be on the same subnet as the internal interface. This setting applies only to a dual-homed cluster.

Final Steps: Network Configuration and Licensing

When the software is installed on both nodes and one AMC is serving as the master management console, you are ready to complete the remaining configuration tasks. There is little difference between configuring a single-node appliance and a cluster. The following are the primary differences when configuring a cluster:

• Both nodes of the cluster appear on the Network Settings page of AMC. In a single-node environment, only one node appears here.

• The Cluster interface settings area that you used in the previous step is displayed. This area is not present in a single-node deployment.

• On the Network Interface Configuration page, you cannot edit the name of the appliance, which is actually the node ID. In a single-node deployment you can edit the appliance name.

• If you plan to enable the external interfaces, you must enable them for both nodes at the same time. AMC prevents you from applying the configuration change until you have enabled the interface for both nodes.
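The last rule is an all-or-nothing check across nodes. The following Python sketch shows how such a validation might work, assuming a pending configuration that records the external-interface state per node; `PendingNodeConfig` and `validate_external_interfaces` are hypothetical and do not describe AMC internals.

```python
from dataclasses import dataclass


@dataclass
class PendingNodeConfig:
    node_id: str  # in a cluster, the node ID doubles as the (read-only) appliance name
    external_enabled: bool


def validate_external_interfaces(nodes: list[PendingNodeConfig]) -> list[str]:
    """Reject a change that enables the external interface on only some nodes."""
    enabled = [n.node_id for n in nodes if n.external_enabled]
    disabled = [n.node_id for n in nodes if not n.external_enabled]
    if enabled and disabled:
        return [f"enable the external interface on {', '.join(disabled)} as well"]
    return []


print(validate_external_interfaces([
    PendingNodeConfig("node1", True),
    PendingNodeConfig("node2", False),
]))  # ['enable the external interface on node2 as well']
```

A configuration in which both nodes are enabled, or both are disabled, passes the check.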

Continue with your cluster configuration:

1. Refer to Managing Licenses for information on retrieving your license file from MySonicWall.com and importing it to the appliance. You must download a separate license for each appliance.

2. Refer to Administering a High-Availability Cluster for information on specifying network interfaces, routing modes, gateways, and so on.

Managing the Cluster

You can perform most cluster management tasks from a single AMC (the one designated as the master). The procedures in this section explain how to manage your cluster.

• Viewing and Configuring Network Information for Each Node

• Starting and Stopping Services

• Performing Maintenance on a Cluster

Viewing and Configuring Network Information for Each Node

From the master AMC, you can view and configure network interface settings for both nodes in the cluster.

To view network interface configuration settings

1. From the main navigation page, click Network Settings. The Basic area gives you an overview of the cluster: the node names, default domain, and addresses.

2. Click the Edit link in the Basic area. The Configure Basic Network Settings page appears.

3. The table in the Cluster nodes area displays information about each of the two nodes, which you can edit on this page. For details on these network settings, see Configuring Network Interfaces.

When powering up both nodes in a cluster, it does not matter which node you power up first.

Starting and Stopping Services

You use the master node to start and stop services in a cluster environment. For details on how to do this, see Stopping and Starting the Secure Mobile Access Services. You cannot control a service on a single node of the cluster, however. When you start or stop a service, the service starts or stops on both nodes of the cluster. To stop services on a single node of the cluster, you have to power down the appliance itself.

Note The Status column on the master Services page indicates the status of the services on the master node only. See Monitoring a Cluster for more information on monitoring a slave node.

From the master node, you can monitor only the services running on the master node.

To view the status of services on the master node

1. From the main navigation menu, click Services.

2. In the Access services area, view the Status indicators for each service. The Stop/Start value shows the status of the service on the master node.

To view the status of services on the slave node, you must log in to AMC on that node.

To view the status of services on the slave node

1. Log in to AMC on the slave node. The Slave Node Administration page appears.

This page provides standby node information in addition to the timestamp of the last cluster synchronization.

2. To see the most current statistics, click the Refresh button or set the Auto-refresh option to On.

3. From the Standby Node Dashboard page you can perform the following functions:

– Start and stop services

– View Logs

– Maintenance

4. To manage the cluster, click the link for the Active node in the top right pane of the page.

Note In a clustered environment, the active user count displayed on the AMC home page and on the System Status page is limited to users on the current node, while the count shown on the User Sessions page includes active users in the entire cluster.

To back up configuration data for a cluster, run Backup Tool on just the master node; this backs up configuration data for both nodes. For details on running Backup Tool, see Saving and Restoring Configuration Data.

Performing Maintenance on a Cluster

To perform maintenance on one or both nodes of a cluster, stop services on both nodes. You should plan all maintenance activities during a time that is least disruptive to users. See Starting and Stopping Services for details.

You can use AMC to install version upgrades and hotfixes. To update the Secure Mobile Access software in a cluster environment, you must install the system upgrade or hotfix and import the license file to each node of the cluster. The order in which you update the nodes in the cluster is very important: begin the process with the master node, and then proceed to the slave node. There may be some disruption to service when performing the update, so schedule it during a maintenance window.

Before you perform an upgrade, make a backup of your current configuration data. See Managing Configuration Data for details.

To upgrade a cluster

1. Log in to AMC on both the master and slave nodes. You can do this from one computer, with both AMC windows open side by side.

2. In AMC on the master node, click Maintenance in the main navigation menu. Install your upgrade following the steps described in Installing System Updates.

3. Install the upgrade on the slave node following the same installation steps.

4. Make sure that the update succeeded by verifying that the version number is correct in AMC on both nodes.
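The ordering rule and the final verification step can be expressed as a short sketch. In this hypothetical Python model, `install` stands in for performing the upgrade through AMC and returns the version the node then reports, and the version strings are placeholders. `can_join` assumes, as the caution in Synchronized Cluster Administration describes, that a node running a different software version is rejected by its peer.

```python
from typing import Callable

UPGRADE_ORDER = ("master", "slave")  # begin with the master node, then the slave


def can_join(local_version: str, peer_version: str) -> bool:
    """Assumption: nodes accept each other only when their versions match exactly."""
    return local_version == peer_version


def upgrade_cluster(versions: dict[str, str], target: str,
                    install: Callable[[str, str], str]) -> dict[str, str]:
    """Upgrade each node in order, then verify that both report the target version."""
    result = dict(versions)
    for role in UPGRADE_ORDER:
        result[role] = install(role, target)
    wrong = {role: v for role, v in result.items() if v != target}
    if wrong:
        raise RuntimeError(f"upgrade not verified on: {wrong}")
    return result


calls = []


def fake_install(role: str, target: str) -> str:
    calls.append(role)  # record the order in which nodes are upgraded
    return target


upgraded = upgrade_cluster({"master": "1.0", "slave": "1.0"}, "2.0", fake_install)
print(upgraded)                # {'master': '2.0', 'slave': '2.0'}
print(calls)                   # ['master', 'slave']
print(can_join("2.0", "1.0"))  # False: a half-upgraded cluster cannot rejoin
```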

Note

• After performing an upgrade, users may need to reauthenticate; new connections are not affected.

• If you want to roll back a cluster version, you must begin with the master node and follow the steps in Rolling Back to a Previous Version.

This section explains how to troubleshoot various cluster environment situations.

• Avoiding Delays in Service if a Failover Occurs

Avoiding Delays in Service if a Failover Occurs

When a node transitions from STANDBY to ACTIVE or vice versa, its interfaces are briefly disabled as the switch checks to see if its ports are connected to another switch. You can configure your switch to skip these checks by enabling spanning-tree fast port negotiation. On Cisco switches, this functionality is known as PortFast.

When configuring a cluster, one or more of the following messages may appear in AMC:


This section provides a step-by-step view of how the cluster responds to various situations.

If both nodes are functioning properly, traffic flows through a cluster in the following way:

1. An incoming request is routed to the cluster’s virtual IP address, which is handled by the HA Active node.

HA Active Node Fails or Goes Offline

This scenario describes what happens when the HA Active node fails or goes offline.

1. The HA Active node (Node 1) fails or goes offline.

2. The HA Standby node (Node 2) promotes itself to the new HA Active node and prompts the administrator to designate it as the new AMC Active node.

3. When the original HA Active node (Node 1) comes back online, it communicates with the current HA Active node (Node 2) and determines that Node 2 is the current HA Active node. Node 1 then demotes itself to the HA Standby node.

4. The current HA Active node (Node 2) synchronizes its state with the new HA Standby node (Node 1).
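The role changes in this scenario form a small state machine. The following Python sketch models them under simplifying assumptions: the `HANode` class and the event functions are hypothetical, and node state is reduced to a dictionary that the Active node copies to the returning node.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    ACTIVE = "ACTIVE"
    STANDBY = "STANDBY"


@dataclass
class HANode:
    name: str
    role: Role
    online: bool = True
    state: dict[str, str] = field(default_factory=dict)


def on_failure(failed: HANode, survivor: HANode) -> None:
    """Steps 1-2: the Active node goes offline and the Standby promotes itself."""
    failed.online = False
    if survivor.role is Role.STANDBY:
        survivor.role = Role.ACTIVE


def on_rejoin(returning: HANode, peer: HANode) -> None:
    """Steps 3-4: the returning node finds an Active peer, demotes itself,
    and receives the Active node's state."""
    returning.online = True
    if peer.role is Role.ACTIVE:
        returning.role = Role.STANDBY
        returning.state = dict(peer.state)


node1 = HANode("Node 1", Role.ACTIVE, state={"last_sync": "t0"})
node2 = HANode("Node 2", Role.STANDBY, state={"last_sync": "t0"})
on_failure(node1, node2)
node2.state["last_sync"] = "t1"  # Node 2 keeps serving while Node 1 is down
on_rejoin(node1, node2)
print(node1.role.name, node2.role.name)  # STANDBY ACTIVE
print(node1.state)                       # {'last_sync': 't1'}
```

Note that the returning node does not reclaim the Active role; it stays Standby until an administrator switches roles.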

The following scenarios detail what happens if a cluster node fails. In most failure situations, the appliance will not require users to reauthenticate and existing user connections will continue operating normally. Service may be interrupted, however, if a particular application requires a user to reauthenticate.

Master (AMC) Node Fails

This scenario describes what happens in AMC when the master node fails.

1. The master node fails.

2. The slave node promotes itself to the master node.

3. The original master comes back online, communicates with the other node, and determines that the other node is now master. The original master demotes itself to slave.

4. The new master synchronizes its state with the new slave node.

If the cluster network fails, the two nodes of the cluster are no longer able to communicate with each other.

1. The master AMC detects that the cluster network is down. It can no longer communicate with the slave node.

Note A failed cluster network is the likely problem if the slave node is running but the master node cannot communicate with it.
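How the master detects the failure is not described here. The following Python sketch assumes a heartbeat with a timeout purely for illustration (`PeerMonitor`, the 5-second timeout, and `diagnose` are hypothetical) and encodes the rule of thumb from the note above.

```python
import time
from typing import Callable


class PeerMonitor:
    """Hypothetical heartbeat monitor for the cluster interface."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_heartbeat = clock()

    def record_heartbeat(self) -> None:
        """Call whenever a heartbeat arrives from the peer over the cluster interface."""
        self.last_heartbeat = self.clock()

    def peer_unreachable(self) -> bool:
        return self.clock() - self.last_heartbeat > self.timeout_s


def diagnose(peer_unreachable: bool, slave_running: bool) -> str:
    """A running slave that the master cannot reach points to a failed cluster network."""
    if not peer_unreachable:
        return "cluster network OK"
    if slave_running:
        return "cluster network likely down"
    return "slave node down or cluster network down"


# Drive the monitor with a fake clock so the example is deterministic.
now = [0.0]
monitor = PeerMonitor(timeout_s=5.0, clock=lambda: now[0])
now[0] = 3.0
monitor.record_heartbeat()
now[0] = 10.0  # 7 s since the last heartbeat
print(diagnose(monitor.peer_unreachable(), slave_running=True))  # cluster network likely down
```

Injecting the clock keeps the monitor testable; in practice `time.monotonic` is preferable to wall-clock time because it is unaffected by system clock changes.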